

Given the current buzz around AI, it's only natural for us to dive in and explore. For Axon's software engineers, integrating LLMs into Flutter presents an exciting experiment — one that's sure to be both intriguing and challenging.

While ChatGPT integrations have garnered much attention, this article focuses on another LLM: PaLM 2. The spotlight here is on its role in the Google Bard chatbot, which is powered by PaLM 2.

In a world of AI, let’s explore the potential of this language model.

PaLM is a large language model similar to OpenAI's GPT series or Meta's LLaMA models. Google first announced PaLM in April 2022. Like other LLMs, PaLM is a flexible system that can potentially handle a variety of text creation and editing tasks. PaLM can be a conversational chatbot like ChatGPT or perform tasks like text summarization or code writing.

Google Bard is a conversational chatbot built on this model and designed for dialogue-oriented applications. Before integrating it into your business operations, evaluate its advantages and shortcomings carefully. With Google Bard, users get interactive, responsive AI-driven conversations.

The Bard API provides a versatile interface for developers to add conversational AI features to their applications. Also, leveraging the Bard AI API allows businesses to embed advanced intelligence into their platforms and improve their customer experience.

PaLM Bard leverages advanced machine learning models to provide users with intelligent text generation capabilities. The new Google Bard app offers users intuitive conversational AI for a variety of tasks.

Developers can create AI-powered applications by integrating Google Bard with Flutter. Since the Flutter framework does not yet have a package or plugin for working with PaLM 2, we will have to use the REST API. This is not a disadvantage, since we can write a package ourselves and reuse it in the future.

The Google Bard API allows developers to integrate advanced natural language processing into their applications.

Let's take a look at the documentation on working with PaLM 2 REST API: https://ai.google.dev/gemini-api/docs/get-started/tutorial?lang=rest

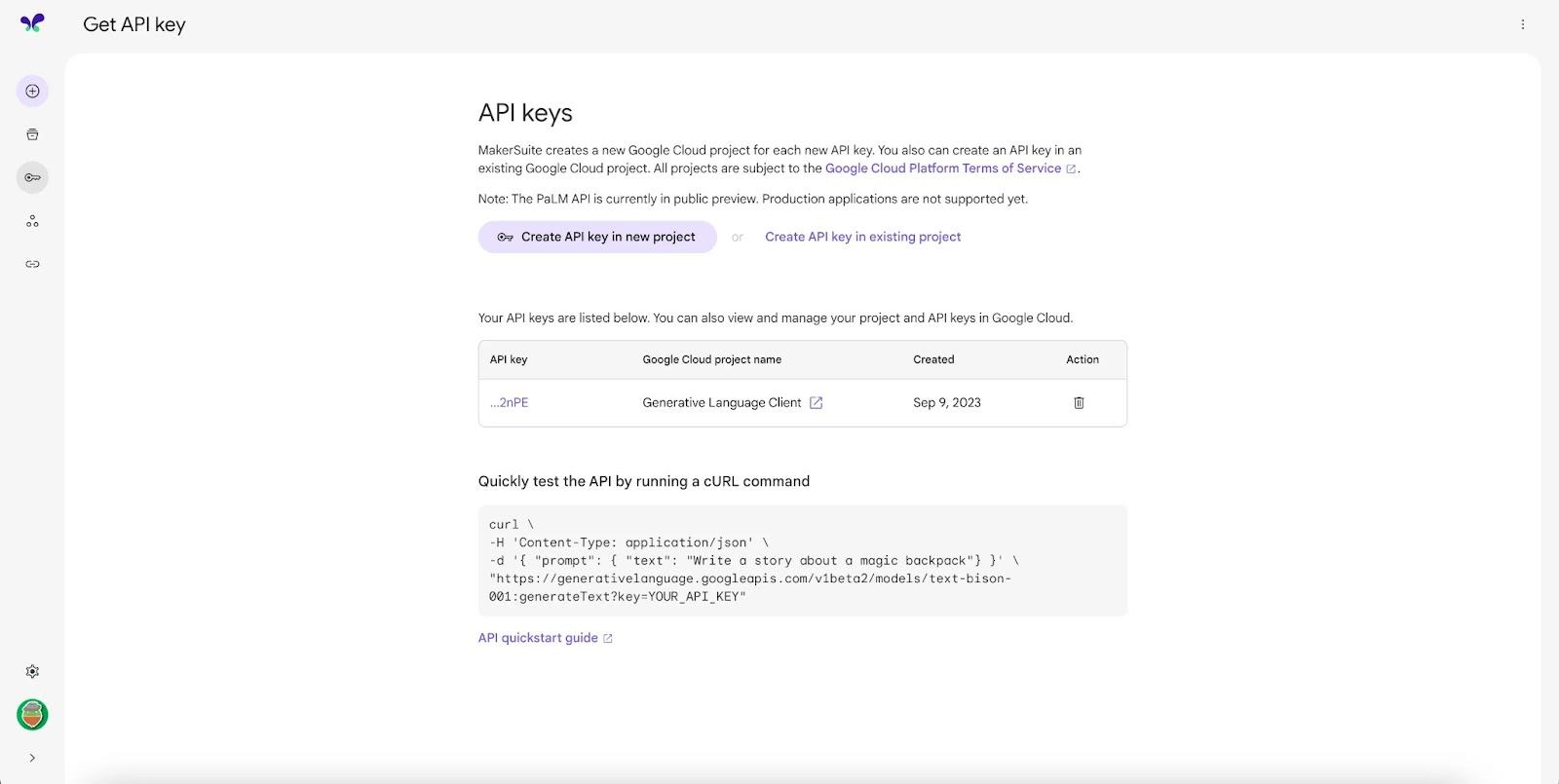

To access the Google Bard service, you need to obtain a Google Bard API key from the developer console.

Let's now create an API key. To do this, go to the MakerSuite home page and click on "Generate API Key."

This step will generate a new API key used by your product, so let’s keep this secure and safe! Don’t publish it on a public repo; otherwise, anyone can impersonate your application!
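One way to keep the key out of the repository is to pass it in at build time rather than hard-coding it. The sketch below assumes the key is supplied through Dart's compile-time environment (for example with --dart-define); the constant name palmApiKey and the helper requireApiKey are hypothetical names chosen for illustration.

```dart
// Assumption: the key is supplied at build time, for example
//   flutter run --dart-define=PALM_API_KEY=your-key
// When nothing is passed, String.fromEnvironment yields an empty string.
const String palmApiKey = String.fromEnvironment('PALM_API_KEY');

/// Hypothetical guard: fails fast when no key was provided.
String requireApiKey(String key) {
  if (key.isEmpty) {
    throw StateError('PALM_API_KEY is not set; pass it with --dart-define.');
  }
  return key;
}

void main() {
  try {
    final key = requireApiKey(palmApiKey);
    print('API key loaded (${key.length} characters).');
  } on StateError catch (e) {
    print(e.message);
  }
}
```

Note that a key compiled into the app this way stays out of source control but can still be extracted from the shipped binary, so for production it may be safer to route requests through your own backend.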

Integrating the Flutter Bard API enables mobile applications to utilize sophisticated AI-driven dialogue systems.

Now, we can use this API. Referring to the documentation, we can see that we are provided with the API method generateText, which looks like this:

    curl "https://generativelanguage.googleapis.com/v1beta2/models/text-bison-001:generateText?key=$PALM_API_KEY" \
        -H 'Content-Type: application/json' \
        -X POST \
        -d '{
          "prompt": {
            "text": "Write a story about a magic backpack."
          },
          "temperature": 1.0,
          "candidateCount": 2
        }'

You can copy this piece of code, substitute your API key, and send a curl request. Secure your access to Bard services by obtaining a Bard API key from the developer portal.

In response, you will receive JSON containing a "candidates" array; each candidate carries the generated text in its "output" field.

Using Flutter Bard, developers can build mobile apps that leverage powerful AI features for improved user experiences.

Since PaLM 2 has several models, we can choose one of them (in the following, we will use the model text-bison-001). We pass the model name in the request URL as follows: https://generativelanguage.googleapis.com/v1beta2/models/text-bison-001:generateText

Breaking this URL down: https://generativelanguage.googleapis.com/v1beta2/ is the path to the API, models/text-bison-001 is the name of the model, and :generateText is the name of the method we will call.
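The same three parts can be assembled in Dart, which makes it easy to swap the model or method later. This is a minimal sketch using only the core Uri class; the helper name buildPalmUri and its parameters are hypothetical and not part of any Google SDK.

```dart
/// Hypothetical helper: composes the endpoint from API root, model, and method.
Uri buildPalmUri({
  required String model,
  required String method,
  required String apiKey,
}) {
  // The API root taken from the URL analysed above.
  const apiRoot = 'https://generativelanguage.googleapis.com/v1beta2';
  // The model name and the method are joined with a colon in the path.
  return Uri.parse('$apiRoot/models/$model:$method')
      .replace(queryParameters: {'key': apiKey});
}

void main() {
  final uri = buildPalmUri(
    model: 'text-bison-001',
    method: 'generateText',
    apiKey: 'YOUR_API_KEY', // placeholder, not a real key
  );
  print(uri);
}
```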

Well, let's get down to the fun part. Bard PaLM combines Google's language model with robust interactive capabilities for advanced text-based applications.

We have already familiarized ourselves with the PaLM API; now it is time to implement it in our Flutter application. For this, we will need the following packages:

    dependencies:
      …
      json_annotation: ^4.8.0
      dio: ^5.0.1

    dev_dependencies:
      …
      build_runner: ^2.3.3
      json_serializable: ^6.6.1

Our next step will be to create an HTTP client using Dio for our API service.

We will also need a data model to put our response from the API. To implement this model, we will use the "json_serializable" package.

Since, in our case, the model will only receive data and store it, we can abandon the "toJson" method. For this, let's specify the createToJson: false parameter in the @JsonSerializable() annotation, so now our model will only store data.

    import 'package:json_annotation/json_annotation.dart';

    // Assumes this file is named palm_model.dart; the part name must match it.
    part 'palm_model.g.dart';

    /// Top-level response: the list of generated candidates.
    @JsonSerializable(createToJson: false)
    class PaLMModel {
      @JsonKey(name: 'candidates')
      final List<OutputModel> candidates;

      PaLMModel({required this.candidates});

      factory PaLMModel.fromJson(Map<String, dynamic> json) => _$PaLMModelFromJson(json);
    }

    /// A single candidate holding the generated text.
    @JsonSerializable(createToJson: false)
    class OutputModel {
      @JsonKey(name: 'output')
      final String output;

      OutputModel({required this.output});

      factory OutputModel.fromJson(Map<String, dynamic> json) => _$OutputModelFromJson(json);
    }

Now that we have described our data model, we should run the following command to generate the serialization code:

flutter pub run build_runner build --delete-conflicting-outputs
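To make it clearer what the generated fromJson factories do, here is a hand-written equivalent that uses only dart:convert. It is a sketch of the mapping, not the code that json_serializable actually emits, and the sample payload is made up for illustration rather than captured from the API.

```dart
import 'dart:convert';

class OutputModel {
  final String output;
  OutputModel({required this.output});

  // Reads the generated text from the "output" field.
  factory OutputModel.fromJson(Map<String, dynamic> json) =>
      OutputModel(output: json['output'] as String);
}

class PaLMModel {
  final List<OutputModel> candidates;
  PaLMModel({required this.candidates});

  // Converts every entry of the "candidates" array into an OutputModel.
  factory PaLMModel.fromJson(Map<String, dynamic> json) => PaLMModel(
        candidates: (json['candidates'] as List<dynamic>)
            .map((e) => OutputModel.fromJson(e as Map<String, dynamic>))
            .toList(),
      );
}

// Made-up sample payload for illustration, not a real API response.
const sampleJson =
    '{"candidates": [{"output": "first"}, {"output": "second"}]}';

void main() {
  final model =
      PaLMModel.fromJson(jsonDecode(sampleJson) as Map<String, dynamic>);
  for (final candidate in model.candidates) {
    print(candidate.output);
  }
}
```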

Well, now we have everything we need to create our service for the PaLM API.

Now, we need to declare our abstract class in which we will describe our API methods.

    abstract class IPaLMDataSource {
      /// Sends [text] as the prompt and returns the parsed response.
      Future<PaLMModel> generateText(String text);
    }

Now, we need to implement this class by inheriting from it.

    class PaLMDataSource extends IPaLMDataSource {
      PaLMDataSource({required this.dioClient});

      final DioClient dioClient;

      …
    }

This class accepts in its constructor the Dio-based HTTP client that we implemented in the previous steps.

Well, now we should implement our generateText method. We will do this as follows:

    @override
    Future<PaLMModel> generateText(String text) async {
      return await dioClient.onRequest(() async {
        const url = 'https://generativelanguage.googleapis.com/v1beta2/models/text-bison-001:generateText';
        // Replace the placeholder with your own API key.
        final queryParameters = {'key': '<API_KEY>'};
        // The prompt text goes into the request body.
        final body = {'prompt': {'text': text}};
        final response = await dioClient.post(url, queryParameters: queryParameters, data: body);
        // Map the JSON response onto the data model.
        return PaLMModel.fromJson(response.data);
      });
    }

As we can see, this service contains one API method that accepts a string of text that will be sent in the request body. After executing this method, we will receive a result of type PaLMModel containing our response from the PaLM API.

As you can see, implementing the PaLM API (Google Bard) is very simple. You can use this service for your own purposes by building business logic on top of it.
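As an illustration of such business logic, the sketch below depends only on the abstract IPaLMDataSource, so it can be exercised without a network call. The models are simplified copies without JSON parsing, and FakePaLMDataSource and pickAnswer are hypothetical names introduced for this example only.

```dart
class OutputModel {
  final String output;
  OutputModel({required this.output});
}

class PaLMModel {
  final List<OutputModel> candidates;
  PaLMModel({required this.candidates});
}

abstract class IPaLMDataSource {
  Future<PaLMModel> generateText(String text);
}

/// Hypothetical stand-in that returns a canned candidate without any network call.
class FakePaLMDataSource implements IPaLMDataSource {
  @override
  Future<PaLMModel> generateText(String text) async => PaLMModel(
        candidates: [OutputModel(output: 'Echo: $text')],
      );
}

/// Hypothetical business rule: use the first non-empty candidate, else a fallback.
String pickAnswer(PaLMModel model, {String fallback = 'No answer available.'}) {
  for (final candidate in model.candidates) {
    final text = candidate.output.trim();
    if (text.isNotEmpty) return text;
  }
  return fallback;
}

Future<void> main() async {
  final IPaLMDataSource dataSource = FakePaLMDataSource();
  final model = await dataSource.generateText('Hello');
  print(pickAnswer(model));
}
```

Because the rule takes a PaLMModel rather than a raw response, the same function should work unchanged once the fake is replaced with the real PaLMDataSource.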


Having covered the technical side of this technology, let's take a look at PaLM 2 and its competitors. We will compare it with GPT-4, the LLM from OpenAI that powers ChatGPT.

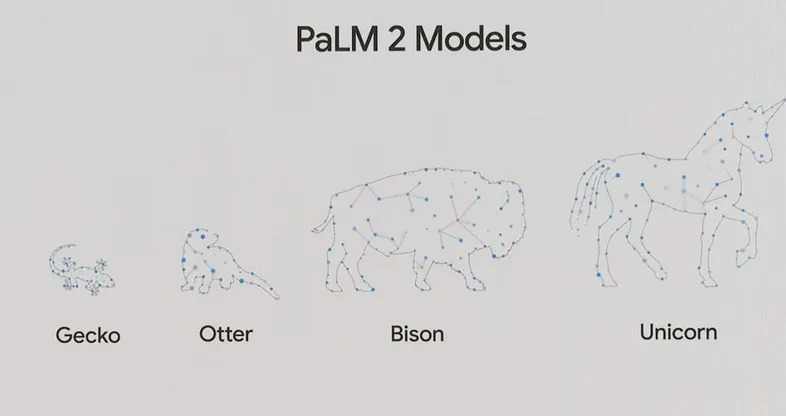

Among language models, PaLM 2 stands out for its advancements over its predecessor. Notably, PaLM 2 comes in several sizes (Gecko, Otter, Bison, and Unicorn), ranging from the petite Gecko to the robust Unicorn, with the smaller models catering to applications with limited computational power. Google asserts improvements in PaLM 2's reasoning abilities, particularly on benchmarks like WinoGrande and DROP, where it edges out GPT-4. PaLM 2 also shows a significant leap in mathematical tasks. Comparing these models directly remains challenging, however, as some comparisons have been omitted, possibly because GPT-4 outperforms PaLM 2 in certain areas. On MMLU and HellaSwag, GPT-4 scored higher than PaLM 2, hinting at the complexity of their competition.

Model size and training data. What sets PaLM 2 apart from its competitor GPT-4 is its model size and training data. While Google remains tight-lipped about the precise size of PaLM 2's training dataset, it mentions that the dataset is substantially more extensive than the one used for the original PaLM. The emphasis during PaLM 2's development was placed on achieving a profound understanding of mathematics, logic, reasoning, and science, and much of its training data is dedicated to these domains. The model's pretraining corpus incorporates diverse sources such as web documents, books, code, and conversational data, resulting in substantial improvements over its predecessor. Furthermore, PaLM 2's multilingual training, covering over 100 languages, augments its contextual comprehension and translation skills. The Bard Google API facilitates the embedding of AI-generated conversational features in web and mobile apps.

Reinforcement learning and human feedback. GPT-4, developed by OpenAI, follows a similar approach regarding undisclosed training data size, but its focus differs. GPT-4's training goals revolve around providing multiple responses to questions and handling various ideological and conceptual perspectives. The result is a model capable of offering various answers, some of which may not align precisely with user expectations. OpenAI fine-tunes GPT-4's behavior using reinforcement learning and human feedback to align its responses with user intent. Although the exact details of each model's training data remain a mystery, it's evident that their divergent training objectives will play a significant role in shaping their real-world performance. It remains to be seen how these differences will manifest in practice.

Based on this analysis, it becomes evident that the PaLM API offers a versatile toolset for various tasks. Its utility spans from generating straightforward text for chatbots, where it can effortlessly create natural and engaging conversations, to tackling more intricate challenges, such as complex mathematical calculations. The PaLM API's adaptability allows developers and businesses to integrate it into a broad spectrum of applications, harnessing its capabilities to enhance user experiences and streamline processes.

Axon takes pride in offering cutting-edge solutions and services underpinned by agile project management methodologies. We recognize the paramount significance of precise estimations in meeting client expectations and project deadlines.

Our approach to estimations revolves around close collaboration with our clients. We understand that every project is unique, and client preferences play a crucial role in defining the scope and scale of software development initiatives. By actively engaging with our clients, we gain deep insights into their specific requirements, priorities, and budgetary constraints. Leave your contacts, and we will provide you with estimations in 24 hours.

At Axon, our software engineering company brings a wealth of experience to the table. We have worked with clients from diverse industries with unique requirements and consistently delivered robust solutions tailored to their needs. With a proven track record of successful projects, our team excels in crafting seamless and innovative Flutter applications. By integrating Bard with Flutter, developers can build rich, AI-enhanced mobile applications. Explore the unique expertise and insights our company offers to ensure a smooth and efficient development process for your Flutter apps.

Throughout the software engineering process, our team has demonstrated a well-established track record of collaboration and professionalism when working with our esteemed partners.

Our team's agility enables us to embrace change and tackle complex challenges with confidence. We approach each project with a flexible mindset, tailoring our methodologies to suit the unique requirements and goals of our clients. Through agile project management, we ensure that our solutions are scalable, maintainable, and adaptable to future needs.


In conclusion, whether you're seeking to provide your chatbot with a more human-like conversational ability or require a powerful tool for handling mathematical computations, the PaLM API stands as a promising option. Its wide-ranging applications make it a valuable asset for those in need of natural language understanding and generation combined with mathematical prowess, offering a comprehensive solution for various use cases. The Bard API Flutter integration helps developers create sophisticated AI-driven mobile applications quickly.

At Axon, we bring a wealth of knowledge and expertise to the table, ensuring that your app development is seamless and tailored to your specific needs.

So, if you're working on an app development project, don't hesitate to reach out to our team of dedicated professionals.

PaLM (Pathways Language Model) is Google’s advanced large language model that powers Google Bard, a conversational AI chatbot designed to generate human-like text responses and assist with various tasks.

Yes, you can integrate PaLM into a Flutter app by using Google’s APIs or SDKs that expose PaLM capabilities. Typically, this involves setting up authentication, sending text prompts to the API, and handling the responses within the Flutter interface.

PaLM 2 offers competitive natural language understanding and generation, with strong support from Google’s ecosystem. Compared to ChatGPT, it may provide better integration with Google services and features, but the choice depends on your specific app needs and preferred AI provider.


